Building a Retrieval Augmented Generation System from Scratch

Learn to build a RAG system using Python from scratch. This guide covers document retrieval, augmentation and text generation.

In this post, I will introduce you to Retrieval Augmented Generation (RAG) and show how to code it from scratch in Python. I will be using an LLM hosted by Groq; register there and get a free API key to follow along.

What is RAG?

Large Language Models are pretrained on huge amounts of text available on the Internet. While they are good at producing fluent responses, they are not always accurate and can hallucinate. They also have no access to contextual information that was not part of their training data.

To constrain the responses of an LLM, it is useful to provide it with context. This encourages the LLM to respond within that context. RAG is a method that helps achieve this.

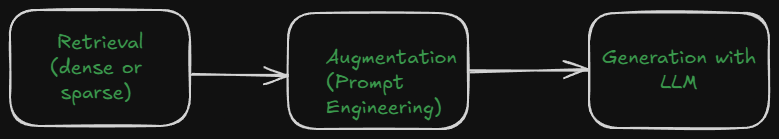

RAG is a combination of three steps:

- Retrieval

- Augmentation

- Generation

In Retrieval, we index a set of documents or databases that contain the context for the LLM, and search that index for the entries that match the user query.

In Augmentation, we build a prompt for the LLM that includes the context retrieved from the index, or any other external information (which could be structured data too).

In Generation, we will use the created prompt along with the context to generate a response to the user query.
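To make the three steps concrete before building the real thing, here is a minimal sketch. Everything in it is an assumption for illustration: the function names are hypothetical, retrieval is a naive word-overlap match, and `fake_llm` is a stand-in for a real model call so that the snippet runs offline.

```python
# Toy illustration of the three RAG steps; every name here is hypothetical.

def retrieve(query, documents):
    """Retrieval: return the document sharing the most words with the query."""
    query_words = set(query.lower().split())
    return max(documents, key=lambda doc: len(query_words & set(doc.lower().split())))


def augment(query, context):
    """Augmentation: combine the retrieved context and the query into one prompt."""
    return f"Context: {context}\nQuery: {query}"


def generate(prompt, llm):
    """Generation: hand the augmented prompt to any callable that wraps an LLM."""
    return llm(prompt)


def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs without an API key.
    return f"(answer based on a prompt of {len(prompt)} characters)"


documents = [
    "Solar panels require minimal maintenance.",
    "Photovoltaic systems convert sunlight directly into electricity.",
]
query = "How do photovoltaic systems make electricity?"

context = retrieve(query, documents)
prompt = augment(query, context)
answer = generate(prompt, fake_llm)

print(context)
print(answer)
```

The rest of the post replaces each stub with something more capable: a proper search engine for retrieval, a prompt template for augmentation, and a hosted LLM for generation.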

Use Cases:

- Chatbots: RAGs can be used to make chats more precise and useful.

- Information Accessibility: RAGs enable non-technical people to interact with data.

- Mitigate Bias: Well-designed RAG systems can help mitigate underlying bias in the models.

Building a RAG from scratch

The backbone of a RAG system is a search engine that takes a query and returns the documents that best match it.

Search, or Information Retrieval, is a huge topic. This post covers two basic approaches: sparse search and dense search.

Sparse Search

I will introduce a simplified form of TF-IDF scoring. It treats each document as a collection of words.

Given a query term (q), we iterate over each document in the index and compute:

- Term Frequency: The number of times the query term appears in the document.

- Document Frequency: The number of documents in the corpus that contain the query term.

Score of the Document = Term Frequency / Document Frequency

For a query with several terms, the per-term scores are added up. Higher scores indicate a better match.
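As a worked example of the scoring rule above, here is a small sketch on a three-document toy corpus. The corpus and the helper names (`tokenize`, `document_frequency`, `score`) are made up for illustration. Note that textbook TF-IDF usually multiplies the term frequency by a logarithmic inverse document frequency such as log(N / DF); the sketch follows the simpler division used in this post.

```python
import re
from collections import Counter

# Toy corpus, invented for this example
corpus = [
    "solar energy is clean energy",
    "solar panels need cleaning",
    "wind energy is also clean",
]


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\b\w+\b", text.lower())


# Term frequencies: one Counter of token counts per document
term_counts = [Counter(tokenize(doc)) for doc in corpus]


def document_frequency(term):
    """Number of documents that contain the term at least once."""
    return sum(1 for counts in term_counts if term in counts)


def score(query, doc_index):
    """Sum of term frequency / document frequency over all query terms."""
    total = 0.0
    for term in tokenize(query):
        df = document_frequency(term)
        if df > 0:
            total += term_counts[doc_index][term] / df
    return total


scores = [score("solar energy", i) for i in range(len(corpus))]
print(scores)  # [1.5, 0.5, 0.5]
```

The first document wins because it contains "solar" once (1 / 2) and "energy" twice (2 / 2), while each of the other two documents matches only one of the query terms.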

Dense Search

We can also encode a query as a vector using Sentence Transformers. While indexing, we create embeddings for each document and store them.

At query time, we compute the cosine similarity between the query embedding and each document embedding in the index, and return the most similar documents.
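To show what the comparison does, here is a dependency-free sketch of cosine similarity. The three-dimensional vectors are invented for illustration; a real model such as `all-MiniLM-L6-v2` produces 384-dimensional embeddings, and a library routine would normally replace the hand-written function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "embeddings"; real ones come from a sentence embedding model
doc_embeddings = {
    "doc about solar panels": [0.9, 0.1, 0.0],
    "doc about wind turbines": [0.1, 0.9, 0.0],
}
query_embedding = [0.8, 0.2, 0.0]

# Rank documents from most to least similar to the query
ranked = sorted(
    doc_embeddings,
    key=lambda name: cosine_similarity(query_embedding, doc_embeddings[name]),
    reverse=True,
)
print(ranked[0])  # doc about solar panels
```

Because cosine similarity depends only on the direction of the vectors, not on their length, a long document and a short query can still score as close matches.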

The following code contains a basic implementation of a search engine that can be used as a backbone for a RAG.

    from collections import defaultdict
    import re

    import numpy as np
    import torch
    from sentence_transformers import SentenceTransformer
    from sklearn.metrics.pairwise import cosine_similarity


    class SearchEngine:
        def __init__(self):
            # Sparse index components
            self.idf = defaultdict(float)  # Document frequency per token (number of documents containing it)
            self.doc_freqs = defaultdict(lambda: defaultdict(int))  # Term frequency per token, per document
            self.num_docs = 0  # Track the number of documents
            self.documents = []  # Store tuples of (document id, tokenized document)

            # Dense index components
            device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # Use CUDA if available
            self.vector_model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2', device=device)
            self.vector_index = defaultdict(list)  # Holds the documents and their embeddings

        def tokenize_document(self, doc):
            # Lowercase the document and split it into word tokens
            tokens = re.findall(r'\b\w+\b', doc.lower())
            return tokens

        def add_document(self, document):
            # Add a document to the sparse index
            doc_id = len(self.documents)
            tokens = self.tokenize_document(document)
            self.documents.append((doc_id, tokens))  # Store document ID and tokens
            self.num_docs += 1
            for token in tokens:
                self.doc_freqs[token][doc_id] += 1  # Update the token's count in this document
            self.compute_idf()  # Recompute document frequencies after adding the document

            # Add the document vector to the dense index
            vector = self.vector_model.encode(document)
            self.vector_index['documents'].append(document)
            self.vector_index['embeddings'].append(vector)
            return {"message": "docs added to index"}

        def compute_idf(self):
            # Compute the document frequency for each token; scores divide by this value
            self.idf.clear()
            for token, doc_ids in self.doc_freqs.items():
                num_docs_with_token = len(doc_ids)
                self.idf[token] = num_docs_with_token

        def query_sparse_index(self, query_string, topk=5):
            # Perform a search using the sparse index
            query_tokens = self.tokenize_document(query_string)  # Tokenize the query
            scores = defaultdict(float)  # Score for each document
            for query_token in query_tokens:
                if query_token in self.idf:
                    document_frequency = self.idf[query_token]
                    # Loop over all documents and add term frequency / document frequency
                    for doc_id, _tokens in self.documents:
                        # .get avoids inserting empty entries, which would inflate document frequencies
                        term_frequency = self.doc_freqs[query_token].get(doc_id, 0)
                        if document_frequency > 0:
                            scores[doc_id] += term_frequency / document_frequency

            # Sort the documents by score in descending order and fetch the top-k results
            sorted_scores = sorted(scores.items(), key=lambda item: item[1], reverse=True)
            top_k_results = [{'id': doc_id, 'score': score} for doc_id, score in sorted_scores[:topk]]
            return top_k_results

        def query_dense_index(self, query_string, topk=5):
            # Perform a search using the dense index
            query_embedding = self.vector_model.encode(query_string)  # Encode the query
            similarities = cosine_similarity(query_embedding.reshape(1, -1), np.array(self.vector_index['embeddings']))[0]

            # Get the indices of the top-k most similar documents
            top_indices = np.argsort(similarities)[-topk:][::-1]
            results = [{'document': self.vector_index['documents'][i], 'score': similarities[i]} for i in top_indices]
            return results

- `self.doc_freqs` stores the term frequencies, and `self.idf` stores the document frequencies.

- `tokenize_document` divides the document into individual words.

- `add_document` tokenizes the document, adds the term frequencies to the index, computes the vector embedding, and adds it to the vector index.

- `query_sparse_index` queries the index with the query terms, using the term frequencies and document frequencies that are already computed.

- `query_dense_index` queries the vector index and returns the top matching documents.
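To see what the sparse index actually holds, here is a standalone sketch of the tokenizer and the nested term-frequency dictionary, without the embedding model. The two short documents are invented for illustration, and the module-level names mirror the class attributes only for readability.

```python
import re
from collections import defaultdict


def tokenize_document(doc):
    """Lowercase the document and split it into word tokens."""
    return re.findall(r"\b\w+\b", doc.lower())


# token -> {document id -> number of occurrences in that document}
doc_freqs = defaultdict(lambda: defaultdict(int))

docs = [
    "Solar panels convert sunlight.",
    "Solar thermal systems capture solar energy.",
]
for doc_id, doc in enumerate(docs):
    for token in tokenize_document(doc):
        doc_freqs[token][doc_id] += 1

tokens = tokenize_document(docs[0])
print(tokens)                    # ['solar', 'panels', 'convert', 'sunlight']
print(dict(doc_freqs["solar"]))  # {0: 1, 1: 2}
print(len(doc_freqs["solar"]))   # 2 documents contain "solar"
```

The inner dictionary gives the term frequency per document, and its length gives the document frequency. One caveat with `defaultdict`: reading a missing key inserts it, so lookups at query time are safer with `.get(doc_id, 0)`.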

Adding Documents to the Search Engine

We can now add documents to the search engine.

    search_engine = SearchEngine()

    documents = [
        "Solar energy is a form of renewable energy that harnesses the sun's energy through photovoltaic cells or solar thermal systems. It is a clean and sustainable source of power, reducing reliance on fossil fuels.",
        "Photovoltaic (PV) systems convert sunlight directly into electricity. PV systems can range from small, rooftop-mounted panels to large-scale installations feeding power into the grid.",
        "Solar thermal systems capture and collect solar thermal energy for use in heating applications. This includes solar water heaters and industrial process heating.",
        "Solar panels require minimal maintenance. However, periodic cleaning and occasional by professionals ensure their longevity and efficiency.",
        "Solar energy is not only useful for generating electricity but also for heating water and space through concentrated solar power (CSP) systems."
    ]

    for doc in documents:
        search_engine.add_document(doc)

Augmented Generation

Now we have the documents of our knowledge base indexed in the search engine. Before generating a response from the LLM, we augment the prompt: we search for the document that best matches the user query and pass it to the LLM as context.
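Stripped of any templating library, augmentation amounts to placing the retrieved text into the chat messages sent to the model. The sketch below uses plain dictionaries with `role` and `content` keys, a common chat message shape; the `build_messages` helper is hypothetical and not part of any library.

```python
def build_messages(context, query):
    """Return chat messages with the retrieved context placed in the user turn."""
    system = "You are a helpful assistant, be concise and precise in your answers."
    user = (
        "Please use the context to generate your answer.\n"
        f"Context: {context}\n"
        f"Query: {query}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_messages(
    context="Solar panels require minimal maintenance.",
    query="Do solar panels need much maintenance?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the instructions in the system message and the context next to the query in the user message makes it easy to swap in a different retrieved document for every request.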

I will be using dense search and provide the top matching document as a context to the LLM.

    import os

    from langchain_groq import ChatGroq
    from prompt_poet import Prompt

    # Assumption: the Groq API key is exported as the GROQ_API_KEY environment variable
    groq_api_key = os.environ["GROQ_API_KEY"]

    llama_70b_llm = ChatGroq(api_key=groq_api_key,
                             temperature=0.5,
                             model_name="llama3-groq-70b-8192-tool-use-preview")

    # Prompt template: a system message plus a user message carrying context and query
    raw_template = """
    - name: system instructions
      role: system
      content: |
        You are a helpful assistant, be concise and precise in your answers.
        If you don't know answer to a specific user query, always answer with 'I don't know'.
        If you know anything about the query, incorporate that knowledge while responding.

    - name: user query
      role: user
      content: |
        Please use the context to generate your answer.
        Context: {{ context }}
        Query: {{ query }}
    """

    def rag(query_string, search_type='dense'):
        # search_type is accepted, but only dense search is implemented here
        results = search_engine.query_dense_index(query_string, topk=1)
        context = results[0]['document']  # Top matching document
        print("Context: {res}".format(res=context))

        # Fill the template with the retrieved context and the user query
        template_data = {"context": context, "query": query_string}
        prompt = Prompt(
            raw_template=raw_template,
            template_data=template_data
        )
        response = llama_70b_llm.invoke(prompt.messages)
        return response.content

First, let us see what happens if we don't use RAG.

    def generate_text(prompt):
        # Send the prompt to the LLM as-is, without any retrieved context
        response = llama_70b_llm.invoke(prompt)
        return response.content

    # Example usage
    prompt = "What is solar energy?"
    generated_response = generate_text(prompt)
    print("Generated Response:", generated_response)

Generated Response: Solar energy is the radiant energy emitted by the sun. It is harnessed using various technologies such as solar panels, which convert sunlight into heat or electricity. It is a renewable energy source and is considered clean since it does not produce greenhouse gases that contribute to climate change.

Now, when we ask the same question through the RAG pipeline, the LLM uses the context document while generating its response.

    rag("What is solar energy?")

Context: Solar energy is a form of renewable energy that harnesses the sun's energy through photovoltaic cells or solar thermal systems. It is a clean and sustainable source of power, reducing reliance on fossil fuels.

"Solar energy is a form of renewable energy that harnesses the sun's energy through photovoltaic cells or solar thermal systems. It is a clean and sustainable source of power, reducing reliance on fossil fuels."

If we ask something out of context, it will not respond.

    rag("Who trained you?")

I don't know

That's it; it is not very complicated.

We implemented a basic search engine and a RAG system from scratch using Python. While this is a simple implementation, it gives a solid foundation for building more complex architectures on top of it.